Bringing machine learning into healthcare is like giving doctors a superpower. The global market for artificial intelligence and machine learning (AI/ML) in healthcare is expected to grow at a compound annual growth rate (CAGR) of 37.5% from 2023 to 2030.
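To see what a 37.5% CAGR implies, take the quoted rate at face value and compound it over the seven years from 2023 to 2030. Here $V_{2023}$ and $V_{2030}$ are simply labels for the market size in each year, not figures from the original forecast:

$$
\frac{V_{2030}}{V_{2023}} = (1 + 0.375)^{2030 - 2023} = 1.375^{7} \approx 9.29
$$

If the forecast holds, the market would be roughly nine times larger in 2030 than in 2023.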

The healthcare industry has always been at the forefront of adopting cutting-edge technologies, and the integration of machine learning (ML) has opened up a plethora of transformative possibilities.

Given the volume of patient data that medical systems hold, machine learning can surface trends and patterns that would be impractical for doctors to find by manual analysis.

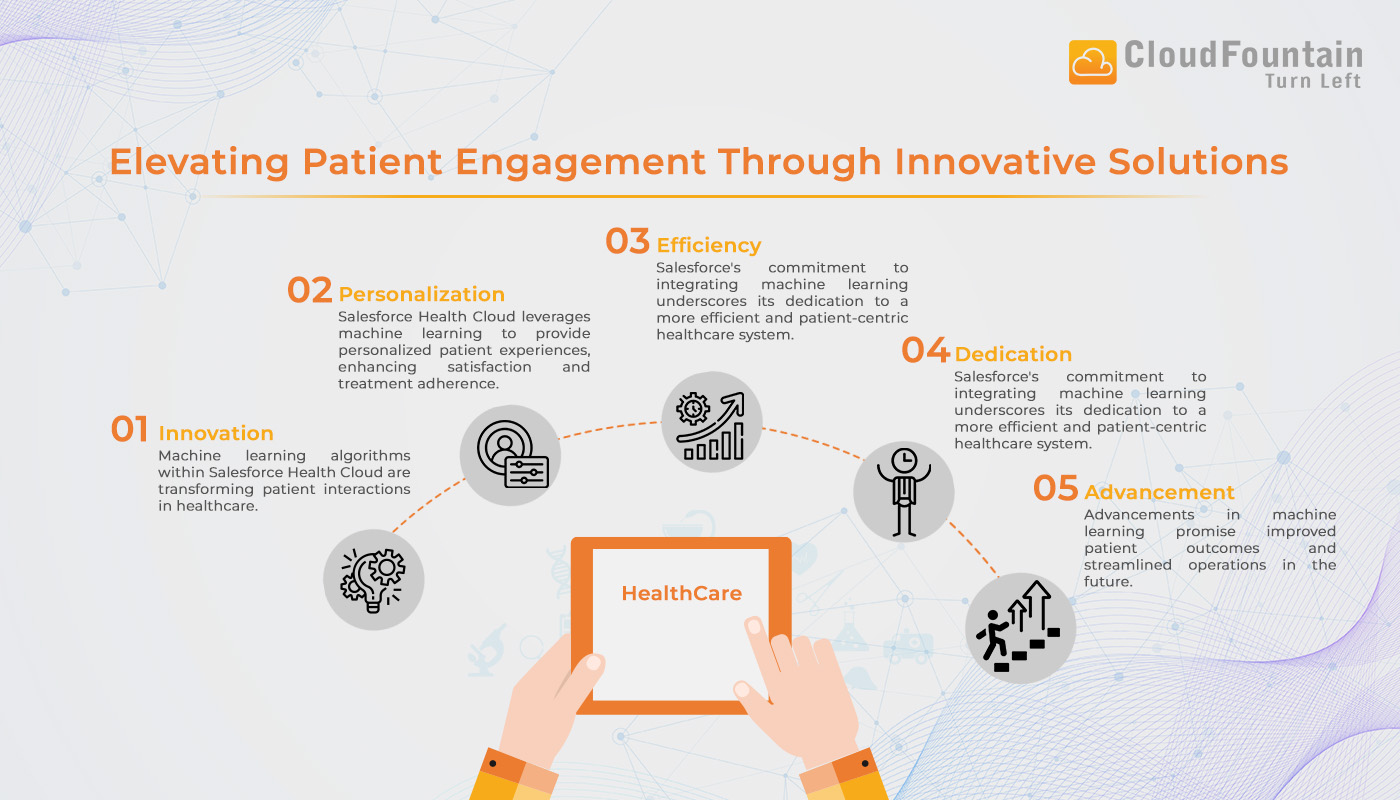

In recent years, Salesforce has transcended its traditional role in customer relationship management (CRM) to become a pivotal force in healthcare. By harnessing its advanced ML capabilities, Salesforce is revolutionising patient care delivery, operational management, and patient engagement.

This blog post delves into the current trends and insights, showcasing how Salesforce’s machine learning innovations are reshaping the healthcare landscape.

How Does Salesforce Enhance Healthcare Interactions?

Salesforce applies machine learning across several areas of healthcare: personalised patient interactions, predictive outreach, administrative automation, and drug development.

Personalised Patient Interactions

Salesforce Health Cloud leverages machine learning to provide personalised patient experiences. By analysing patient data, including medical history, preferences, and behaviours, healthcare providers can customise communications and care plans to suit individual needs. This tailored approach enhances patient satisfaction and improves adherence to treatment plans.

Health Cloud is a comprehensive CRM platform designed specifically for healthcare providers, payers, and pharmaceutical companies. Its features that use machine learning to enhance healthcare delivery include:

- Centralised patient data management for a holistic view

- Facilitation of care team collaboration for coordinated care

- Personalised patient engagement through communication and reminders

- Predictive analytics-driven identification of high-risk patients for proactive management

Salesforce’s incorporation of machine learning into these capabilities underscores its dedication to transforming healthcare by improving patient engagement, personalising interactions, and putting predictive analytics to work.

Predictive Patient Outreach

Predictive patient outreach uses machine learning-powered analytics to anticipate patient deterioration, hospital readmissions, and potential complications. This foresight lets healthcare providers take preventive measures, which can improve patient outcomes and reduce healthcare costs.

Salesforce offers a suite of capabilities that enable healthcare organisations to conduct effective predictive patient outreach. With Salesforce Health Cloud, comprehensive patient data is aggregated, allowing machine learning algorithms to analyse patterns and trends. This analysis empowers healthcare providers to identify patients at risk or in need of follow-up care.

Salesforce Einstein Analytics adds advanced analytics and AI on top of this data. Its capabilities include predictive analytics, which uses machine learning to forecast patient outcomes and identify at-risk individuals; operational efficiency analysis, which helps optimise resource allocation, streamline workflows, and reduce costs; and clinical data analysis, which draws insights from clinical data to support diagnostic accuracy and treatment efficacy.
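To make the idea of "identifying patients at risk" concrete, here is a minimal, hypothetical sketch of how a logistic risk score could drive an outreach list. It is not Salesforce Einstein's model or API: the features (`age`, `prior_admissions`, `chronic_conditions`), the weights, and the 0.5 threshold are illustrative assumptions, and a real model would be trained and validated on historical outcome data.

```python
import math
from dataclasses import dataclass


@dataclass
class Patient:
    patient_id: str
    age: int
    prior_admissions: int    # hypothetical feature: admissions in the past 12 months
    chronic_conditions: int  # hypothetical feature: count of chronic diagnoses


# Illustrative coefficients only; a real model would learn these from historical data.
WEIGHTS = {"age": 0.03, "prior_admissions": 0.8, "chronic_conditions": 0.5}
BIAS = -5.0


def readmission_risk(p: Patient) -> float:
    """Return a probability-like score in (0, 1) using a logistic function."""
    z = (
        BIAS
        + WEIGHTS["age"] * p.age
        + WEIGHTS["prior_admissions"] * p.prior_admissions
        + WEIGHTS["chronic_conditions"] * p.chronic_conditions
    )
    return 1.0 / (1.0 + math.exp(-z))


def outreach_list(patients: list[Patient], threshold: float = 0.5) -> list[tuple[str, float]]:
    """Flag patients whose score meets the threshold, highest risk first."""
    scored = [(p.patient_id, readmission_risk(p)) for p in patients]
    flagged = [item for item in scored if item[1] >= threshold]
    return sorted(flagged, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    cohort = [Patient("A", 82, 3, 4), Patient("B", 35, 0, 0)]
    for patient_id, risk in outreach_list(cohort):
        print(f"Follow up with {patient_id}: risk {risk:.2f}")
```

Sorting by score means the care team works through the highest-risk patients first; the threshold is a tuning choice that trades missed cases against outreach workload.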

Automating Administrative Tasks for Seamless Operations

Administrative tasks, such as appointment scheduling, billing, and insurance claims processing, can be automated using machine learning. Natural Language Processing (NLP) algorithms can handle patient queries and documentation, freeing healthcare professionals to focus on direct patient care. For example, voice-to-text transcription services can convert doctors’ dictated notes into electronic health record (EHR) entries in real time, improving efficiency and accuracy.

Salesforce offers a variety of tools that help streamline administrative tasks and optimise resource allocation.

- Automating Administrative Tasks with Salesforce Health Cloud: With Salesforce Health Cloud, healthcare providers can automate routine administrative tasks like appointment scheduling and patient registration. This not only saves time but also reduces the risk of errors, allowing staff to focus more on delivering excellent care to patients.

- Streamlining Patient Inquiries with Salesforce Service Cloud: Salesforce Service Cloud provides case management features that automate the handling of patient inquiries and requests. Through intelligent routing, cases are directed to the right staff members for quick resolution. Plus, self-service portals empower patients to access information and schedule appointments independently, further easing the administrative burden on staff.

- Forecasting Patient Demand with Salesforce Einstein Analytics: With Salesforce Einstein Analytics, healthcare organisations can gain valuable insights into patient demand for services. Predictive analytics models forecast future demand, enabling providers to allocate resources more effectively. Real-time data monitoring allows for proactive decision-making to address resource shortages or surpluses as they arise.

- Managing Healthcare Data with Machine Learning: Machine learning plays a vital role in managing and analysing the ever-expanding volume of healthcare data. With efficient data management systems, healthcare organisations can integrate and analyse data from diverse sources, yielding comprehensive patient insights crucial for large-scale health initiatives and research projects.

- Optimising Resource Allocation with Predictive Models: Salesforce offers solutions to optimise resource allocation by leveraging historical data and current trends. Through predictive models, Salesforce assists healthcare organisations in forecasting demand for services, ensuring optimal utilisation of staffing, equipment, and inventory.

- Seamless Integration with Salesforce MuleSoft: Salesforce MuleSoft facilitates seamless integration of various healthcare systems and data sources. By automating data retrieval and sharing, MuleSoft reduces manual errors and ensures that information from electronic health records and other sources is readily accessible and actionable.
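Demand forecasting, mentioned above for Einstein Analytics and predictive resource models, can be illustrated with one of the simplest forecasting techniques, simple exponential smoothing. This is a hypothetical sketch, not how Salesforce's models work internally; the weekly visit counts, the smoothing factor `alpha`, and the `visits_per_staff_shift` capacity figure are all assumptions for illustration.

```python
import math
from typing import Sequence


def exponential_smoothing_forecast(history: Sequence[float], alpha: float = 0.5) -> float:
    """One-step-ahead forecast via simple exponential smoothing.

    alpha in (0, 1]: higher values weight recent observations more heavily.
    """
    if not history:
        raise ValueError("history must contain at least one observation")
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    level = history[0]
    for observation in history[1:]:
        # Blend the newest observation with the running level.
        level = alpha * observation + (1 - alpha) * level
    return level


def staff_needed(forecast_visits: float, visits_per_staff_shift: float) -> int:
    """Round up so the forecast demand is always covered."""
    return math.ceil(forecast_visits / visits_per_staff_shift)


if __name__ == "__main__":
    weekly_visits = [100, 120, 110, 130]  # hypothetical clinic data
    forecast = exponential_smoothing_forecast(weekly_visits, alpha=0.5)
    print(forecast, staff_needed(forecast, visits_per_staff_shift=25))
```

With these inputs the forecast is 120 visits, which at an assumed 25 visits per shift rounds up to 5 staff shifts. Production systems typically use richer models that account for seasonality and trend, but the principle of turning a demand forecast into a staffing decision is the same.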

Furthermore, the Salesforce AppExchange provides a plethora of third-party applications tailored to healthcare needs. These tools, such as workflow automation and document management systems, streamline administrative processes like documentation and reporting, allowing healthcare staff to dedicate more time to patient care.

By harnessing these Salesforce capabilities, healthcare organisations can enhance operational efficiency, streamline workflows, and ultimately deliver superior patient care.

Pioneering Drug Development through Data Insights

Salesforce’s machine learning tools can help accelerate drug development and strengthen clinical trials.

By aggregating and analysing data from sources such as clinical trials and genomic research, Salesforce can help researchers identify promising drug candidates faster and more efficiently.

This can shorten the research phase and bring new treatments to market sooner. Machine learning algorithms can also improve the design and execution of clinical trials by identifying suitable participants, predicting outcomes, and monitoring progress in real time.

Salesforce Health Cloud’s ability to integrate and analyse diverse datasets can help make trials more efficient and improve their chances of success. Salesforce Tableau adds powerful data visualisation and business intelligence: its interactive dashboards let healthcare teams explore trends and insights for informed decision-making, and its reports track performance metrics and outcomes, supporting more efficient and effective drug development and clinical trials.
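Identifying suitable trial participants starts with screening candidates against inclusion and exclusion criteria. The sketch below is a hypothetical rule-based pre-screen, not a Salesforce or Health Cloud API; the `Candidate` and `TrialCriteria` types, the age range, and the use of the ICD-10 code E11 (type 2 diabetes) as the required diagnosis are illustrative assumptions. A machine learning model would typically rank the candidates that pass such hard rules.

```python
from dataclasses import dataclass, field


@dataclass
class Candidate:
    candidate_id: str
    age: int
    diagnosis_codes: set[str] = field(default_factory=set)
    on_excluded_medication: bool = False  # hypothetical exclusion flag


@dataclass
class TrialCriteria:
    min_age: int
    max_age: int
    required_diagnosis: str  # e.g. an ICD-10 code


def is_eligible(c: Candidate, t: TrialCriteria) -> bool:
    """Apply inclusion (age, diagnosis) and exclusion (medication) rules."""
    in_age_range = t.min_age <= c.age <= t.max_age
    has_diagnosis = t.required_diagnosis in c.diagnosis_codes
    return in_age_range and has_diagnosis and not c.on_excluded_medication


def screen(candidates: list[Candidate], criteria: TrialCriteria) -> list[str]:
    """Return the IDs of candidates who pass every rule, in input order."""
    return [c.candidate_id for c in candidates if is_eligible(c, criteria)]


if __name__ == "__main__":
    criteria = TrialCriteria(min_age=18, max_age=75, required_diagnosis="E11")
    pool = [
        Candidate("P1", 54, {"E11"}),
        Candidate("P2", 17, {"E11"}),        # too young
        Candidate("P3", 60, {"I10"}),        # missing required diagnosis
        Candidate("P4", 45, {"E11"}, True),  # excluded medication
    ]
    print(screen(pool, criteria))
```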

Addressing Challenges and Ethical Considerations

Data privacy and security are paramount concerns in the era of big data, especially in healthcare, where sensitive patient information is involved. Partnering with CloudFountain helps healthcare organisations prioritise regulatory compliance. Here is how CloudFountain can help you stay compliant:

- Implementing robust security measures that safeguard patient data in line with GDPR and HIPAA. Machine learning algorithms can further strengthen security by detecting and responding to potential threats.

- Our seasoned experts guide your digital transformation journey strategically.

- Prioritising budget control while keeping your business at the forefront of digital advancement.

Choosing CloudFountain’s consulting services in Boston, USA, gives you an innovative and reliable partner.

The Future of Salesforce Machine Learning in Healthcare

The future of Salesforce machine learning in healthcare is promising, with continuous advancements expected to bring even more sophisticated applications. These may include advanced telemedicine services, enhanced patient monitoring through IoT integration, and the development of more personalised treatment plans. Salesforce’s commitment to innovation, coupled with its strong emphasis on ethical practices, positions it as a leader in the healthcare technology landscape.

Furthermore, Salesforce offers additional capabilities through its various platforms, extending its reach in healthcare:

- Salesforce AppExchange: Offers a variety of third-party applications tailored for healthcare, including EHR integrations for seamless data exchange, telehealth solutions enabling remote consultations, and health and wellness apps supporting chronic disease management.

- Salesforce MuleSoft: Facilitates integration of diverse healthcare systems and data sources, enabling data integration, API management for secure data exchange, and workflow automation to improve efficiency.

- Salesforce Community Cloud: Fosters collaboration and communication among patients, providers, and caregivers through patient communities, provider collaboration tools, and knowledge-sharing platforms.

- Salesforce Service Cloud: Enhances patient support and care management with features like case management, omni-channel support, and self-service portals for patients.

- Salesforce IoT: Connects and analyses data from medical devices and wearables for real-time monitoring, proactive alerts, and data integration with EHRs for a comprehensive view of patient health.

- Salesforce Tableau: Delivers powerful data visualisation and business intelligence capabilities, enabling healthcare providers to create interactive dashboards, explore data insights, and generate comprehensive reports for informed decision-making.
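The "proactive alerts" from connected devices described for Salesforce IoT can be pictured as readings checked against safe ranges. This is a minimal, hypothetical sketch rather than the Salesforce IoT API: the `Reading` type, the metric names, and the ranges in `SAFE_RANGES` are illustrative assumptions, and real thresholds would be set clinically, often per patient.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    device_id: str
    metric: str   # e.g. "heart_rate", "spo2"
    value: float


# Hypothetical inclusive safe ranges; real limits would be clinically defined.
SAFE_RANGES: dict[str, tuple[float, float]] = {
    "heart_rate": (50.0, 110.0),
    "spo2": (92.0, 100.0),
}


def check_readings(readings: list[Reading]) -> list[Reading]:
    """Return readings that fall outside their metric's safe range.

    Readings for metrics without a configured range are skipped.
    """
    alerts = []
    for r in readings:
        bounds = SAFE_RANGES.get(r.metric)
        if bounds is None:
            continue
        low, high = bounds
        if not low <= r.value <= high:
            alerts.append(r)
    return alerts


if __name__ == "__main__":
    stream = [
        Reading("wearable-1", "heart_rate", 72.0),
        Reading("wearable-1", "spo2", 89.0),
        Reading("wearable-2", "temperature", 37.0),
    ]
    for alert in check_readings(stream):
        print(f"ALERT {alert.device_id}: {alert.metric} = {alert.value}")
```

Fixed thresholds are the simplest form of alerting; machine learning approaches instead learn each patient's baseline and flag deviations from it.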

These Salesforce capabilities play a vital role in transforming the healthcare industry, enhancing patient care, improving operational efficiency, and enabling data-driven decision-making.

How Can You Integrate Salesforce Into Your Healthcare Systems?

CloudFountain stands out as a leading provider of Salesforce Integration Solutions in Boston, USA. Our team comprises seasoned and qualified Salesforce personnel with expertise in developing and integrating Salesforce modules. There are compelling reasons to choose us:

- We carefully evaluate which Salesforce options best fit your specific needs.

- Our implementation process is precise and accurate, ensuring seamless integration and functionality.

- We guarantee the effectiveness of the applications we develop, drawing on our industry experience and expertise.

- With our ongoing support and service, we ensure that your Salesforce solutions continue to meet your evolving needs.

With CloudFountain as your trusted partner, you can rest assured that your Salesforce integration needs are in capable hands, driving your organisation towards greater efficiency, effectiveness, and success in the healthcare domain.

Final Thought

Machine learning is transforming healthcare, promising improved patient care and lower costs, though ethical and privacy challenges must be addressed. Salesforce’s machine learning advancements enhance patient engagement, clinical support, operations, and drug development, and integrating these technologies can boost outcomes and efficiency. CloudFountain, a premier Salesforce integration company in Boston, USA, offers tailored solutions with precise implementation and ongoing support. With a focus on effectiveness and expertise, we carefully evaluate the options to ensure seamless integration and optimal application. Choose CloudFountain for transformative Salesforce integration that drives efficiency and success in healthcare.